Informatica Error Handling Best Practices

Since this morning, however, the session has been failing with the following error: ERROR: Failed to ERROR 1 LM36320 Session task instance (smXXXX): Execution failed. I finished installing Informatica 9.0.1 on 64-bit Solaris SPARC.

Informatica Error Handling Best Practices For Students

Before learning about ETL testing, it is important to understand Business Intelligence and data warehousing. Let’s get started.

What is BI?

Business Intelligence is the process of collecting raw business data and turning it into information that is more useful and meaningful. The raw data consists of the records of an organization’s daily transactions, such as interactions with customers, administration of finance, and management of employees. This data is then used for reporting, analysis, data mining, data quality and interpretation, and predictive analysis.

What is a Data Warehouse?

A data warehouse is a database designed for query and analysis rather than for transaction processing. It is constructed by integrating data from multiple heterogeneous sources. It enables a company or organization to consolidate data from several sources, and it separates the analysis workload from the transaction workload.

Data is turned into high-quality information to meet enterprise reporting requirements for all levels of users.

What is ETL?

ETL stands for Extract-Transform-Load, the process by which data is loaded from the source system into the data warehouse. Data is extracted from an OLTP database, transformed to match the data warehouse schema, and loaded into the data warehouse database. Many data warehouses also incorporate data from non-OLTP systems such as text files, legacy systems, and spreadsheets.

Let’s see how it works. Consider a retail store with different departments such as sales, marketing, and logistics. Each department handles customer information independently, and each stores that data quite differently: the sales department stores it by customer name, while the marketing department stores it by customer ID.

If they now want to check a customer’s history and find out which products he or she bought in response to different marketing campaigns, the task would be very tedious.

The solution is to use a data warehouse to store information from the different sources in a uniform structure using ETL.
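The retail example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the record layouts, the `PurchaseRow` structure, the `name_to_id` lookup, and the simplification of one campaign per customer are all hypothetical and are not part of any particular ETL tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PurchaseRow:
    """Hypothetical uniform warehouse row combining both departments' data."""
    customer_id: int
    customer_name: str
    product: str
    campaign: Optional[str]


def transform(sales_rows, marketing_rows, name_to_id):
    """Conform sales data (keyed by name) and marketing data (keyed by ID)."""
    # Assumption for the sketch: at most one campaign per customer ID.
    campaign_by_id = {m["customer_id"]: m["campaign"] for m in marketing_rows}
    unified = []
    for sale in sales_rows:
        name = sale["customer_name"].strip()   # basic cleansing
        customer_id = name_to_id[name]         # resolve name to the shared key
        unified.append(
            PurchaseRow(customer_id, name, sale["product"],
                        campaign_by_id.get(customer_id))
        )
    return unified


def load(rows, warehouse_table):
    """Append conformed rows to an in-memory stand-in for a warehouse table."""
    warehouse_table.extend(rows)
    return len(rows)


if __name__ == "__main__":
    # Extract: each department's data arrives in its own shape.
    sales = [{"customer_name": " Ann Lee ", "product": "Kettle"},
             {"customer_name": "Bo Chen", "product": "Lamp"}]
    marketing = [{"customer_id": 101, "campaign": "Spring Sale"}]
    warehouse = []
    load(transform(sales, marketing, {"Ann Lee": 101, "Bo Chen": 102}), warehouse)
    for row in warehouse:
        print(row)
```

Once both sources share one key and one row shape, a customer's purchases and the campaigns that reached them can be read from a single structure.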

ETL can transform dissimilar data sets into a unified structure. BI tools can later be used to derive meaningful insights and reports from this data.

The following diagram gives you the road map of the ETL process.

Extract

Extract relevant data.

Transform

Transform data to DW (Data Warehouse) format.

Build keys: A key is one or more data attributes that uniquely identify an entity. The various types of keys are primary key, alternate key, foreign key, composite key, and surrogate key. The data warehouse owns these keys and never allows any other entity to assign them.

Cleansing of data: After the data is extracted, it moves into the next phase, cleaning and conforming. Cleaning corrects omissions in the data and identifies and fixes errors. Conforming means resolving conflicts between incompatible data so that it can be used in an enterprise data warehouse.

In addition, this system creates metadata that is used to diagnose source system problems and improve data quality.

Load

Load data into the DW (Data Warehouse).

Build aggregates: Creating an aggregate means summarizing and storing data that is available in the fact table in order to improve the performance of end-user queries.

What is ETL Testing?

ETL testing is done to ensure that the data loaded from a source to the destination after business transformation is accurate.

It also involves verifying data at the various intermediate stages used between source and destination.

ETL Testing Process

Like other testing processes, ETL testing goes through different phases. It is performed in five stages:

1. Identifying data sources and requirements
2. Data acquisition
3. Implementing business logic and dimensional modelling
4. Building and populating data
5. Building reports

Types of ETL Testing

Production Validation Testing: Also called “table balancing” or “production reconciliation”, this type of ETL testing is done on data as it is being moved into production systems. To support your business decisions, the data in your production systems has to be in the correct order. Data Validation Option provides the ETL testing automation and management capabilities to ensure that production systems are not compromised by the data.

Source to Target Testing (Validation Testing): This type of testing is carried out to validate whether the transformed data values are the expected data values.

Application Upgrades: This type of ETL testing can be automatically generated, saving substantial test development time. It checks whether the data extracted from an older application or repository is exactly the same as the data in the new application or repository.

Metadata Testing: Metadata testing includes data type checks, data length checks, and index/constraint checks.

Data Completeness Testing: Data completeness testing verifies that all the expected data is loaded into the target from the source. Some of the tests that can be run compare and validate counts, aggregates, and actual data between the source and target for columns with simple or no transformation.

Data Accuracy Testing: This testing is done to ensure that the data is accurately loaded and transformed as expected.

Data Transformation Testing: Data transformation testing is needed because in many cases verification cannot be achieved by writing one source query and comparing the output with the target. Multiple SQL queries may need to be run for each row to verify the transformation rules.

Data Quality Testing: Data quality tests include syntax and reference tests.

Data quality testing is done to avoid errors caused by, for example, a date or an order number during business processing.

Syntax Tests: These report dirty data based on invalid characters, character patterns, incorrect upper or lower case order, and so on.

Reference Tests: These check the data against the data model, for example the Customer ID.

Data quality testing includes number checks, date checks, precision checks, data checks, null checks, and so on.

Incremental ETL Testing: This testing is done to check the integrity of old and new data when new data is added.

Incremental testing verifies that inserts and updates are processed as expected during the incremental ETL process.

GUI/Navigation Testing: This testing is done to check the navigation or GUI aspects of the front-end reports.

How to Create an ETL Test Case

ETL testing is a concept that can be applied to different tools and databases in the information management industry. The objective of ETL testing is to assure that the data loaded from a source to the destination after business transformation is accurate. It also involves verifying data at the various intermediate stages used between source and destination.

While performing ETL testing, an ETL tester will always use two documents:

ETL mapping sheets: An ETL mapping sheet contains all the information about the source and destination tables, including each and every column and its look-up in reference tables. ETL testers need to be comfortable with SQL queries, as ETL testing may involve writing large queries with multiple joins to validate data at any stage of ETL. ETL mapping sheets are a significant help when writing queries for data verification.

DB schema of source and target: It should be kept handy to verify any detail in the mapping sheets.

ETL Test Scenarios and Test Cases

Test Scenario: Mapping doc validation
Test Cases: Verify whether the corresponding ETL information is provided in the mapping doc.

Automation of ETL Testing

The general methodology of ETL testing is to use SQL scripting or to “eyeball” the data. These approaches to ETL testing are time-consuming, error-prone, and seldom provide complete test coverage.

To accelerate testing, improve coverage, reduce costs, and improve the detection ratio of ETL testing in production and development environments, automation is the need of the hour.
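As one illustration of what such automation could look like, the sketch below scripts the count and aggregate comparison described under data completeness testing, using Python's standard `sqlite3` module. The table and column names (`src_orders`, `tgt_orders`, `order_id`, `amount_cents`) are hypothetical, and a real warehouse would use its own database driver. Table names are interpolated directly into the SQL, so they are assumed to come from a trusted test configuration.

```python
import sqlite3


def completeness_report(conn, source, target, key, measure):
    """Compare row counts, a SUM aggregate, and keys between two tables."""
    def profile(table):
        count, total = conn.execute(
            f"SELECT COUNT(*), COALESCE(SUM({measure}), 0) FROM {table}"
        ).fetchone()
        return {"count": count, "total": total}

    src, tgt = profile(source), profile(target)
    # Keys present in the source but absent from the target.
    missing = sorted(
        row[0] for row in conn.execute(
            f"SELECT {key} FROM {source} EXCEPT SELECT {key} FROM {target}"
        )
    )
    return {
        "source": src,
        "target": tgt,
        "counts_match": src["count"] == tgt["count"],
        "totals_match": src["total"] == tgt["total"],
        "missing_keys": missing,
    }


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE src_orders (order_id INTEGER, amount_cents INTEGER);
        CREATE TABLE tgt_orders (order_id INTEGER, amount_cents INTEGER);
        INSERT INTO src_orders VALUES (1, 1000), (2, 2500), (3, 400);
        INSERT INTO tgt_orders VALUES (1, 1000), (2, 2500);
    """)
    print(completeness_report(conn, "src_orders", "tgt_orders",
                              "order_id", "amount_cents"))
```

A check like this can run after every load and fail the job when counts, totals, or keys diverge, instead of relying on someone to eyeball the data.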

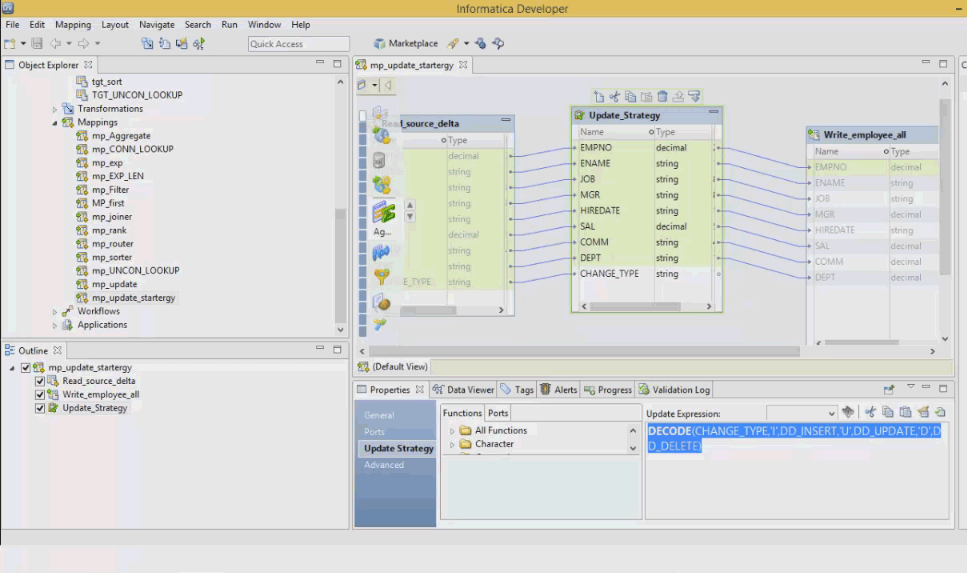

Hi All,

We have a business requirement to do detailed error handling on source data. If a source record violates data integrity rules, it has to be loaded into an error logging table. We did this using a Router transformation.

Now the user says they need to know which column or combination of columns violated the data integrity rules, so we have to put an appropriate error message in another column of the error logging table. The contents of the erroneous record are to be put into one column of the same table, in the same record.

What is the best practice for doing this?

Thanks in advance,
John-Smith Long

Hi,

I think Varghese is on the right track. Global IT Solutions (GITS) has had a lot of success in the Data Quality realm by taking a multi-faceted approach to Data Quality.

Set Up

a) Identify the degree of importance and the subsequent action for each data item/error type. For instance, you may issue a warning for some FK attributes that do not have parents and reject records for others. It is interesting to note that the number of distinct 'types' of data errors is not very high, so this tends not to be a huge exercise.

Moreover, each ETL data error can usually be categorized into a meaningful error-type category (e.g., Missing Parent, Data Type Mismatch, etc.).

Run Time

When an error occurs, the cost-effective method of handling DQ is to capture the error type, column name, record number in the file, the user who last updated the record, etc. in a custom error structure. This is 'outside of the box' programming. By default, most programmers simply trap the error in an error log or track whether all the records loaded properly. Subsequently, users have to manually read through logs and figure out what went wrong.

GITS recommends a different approach. A few additional DQ structures are used to track the types of errors, information associated with the file, and who should be notified. The interesting thing is that the DQ code is reusable: you write it once and paste it into your ETL code across applications. You include these DQ error structures in addition to your normal error log.

Post-Mortem DQ Management

The goal of DQ should always be to improve over time.

If you capture errors in a smart fashion, generating reports that capture metrics associated with errors is child's play.

Google William Moore, Data Architect, if you need more info on this approach. Our website provides a high-level description of this methodology.

Regards,
William
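For readers who want something concrete, here is a minimal, tool-independent Python sketch of the idea discussed in this thread: each rule names an error type and the column or columns it checks, rejected records go to an error structure with a message and the full record in one column, and a summary by error type gives the post-mortem metrics. It is not Informatica code and not the GITS implementation. The rule set, column names, `KNOWN_CUSTOMERS` lookup, and error-row layout are all assumptions made for illustration.

```python
import json
from collections import Counter
from datetime import datetime

KNOWN_CUSTOMERS = {"C001", "C002"}  # hypothetical parent-key lookup


def _is_date(value):
    """Return True when value is a YYYY-MM-DD date string."""
    try:
        datetime.strptime(value, "%Y-%m-%d")
        return True
    except (TypeError, ValueError):
        return False


# Each rule: (error type, columns involved, predicate that is True when valid).
RULES = [
    ("Missing Value", ("order_id",), lambda r: bool(r.get("order_id"))),
    ("Missing Parent", ("customer_id",),
     lambda r: r.get("customer_id") in KNOWN_CUSTOMERS),
    ("Data Type Mismatch", ("order_date",),
     lambda r: _is_date(r.get("order_date"))),
    # Multi-column rule: only evaluated when both dates are well formed.
    ("Invalid Combination", ("ship_date", "order_date"),
     lambda r: not (_is_date(r.get("ship_date")) and _is_date(r.get("order_date")))
     or r["ship_date"] >= r["order_date"]),
]


def validate(records):
    """Split records into clean rows and error-log rows (one per bad record)."""
    good, errors = [], []
    for record_no, record in enumerate(records, start=1):
        failed = [(etype, cols) for etype, cols, is_valid in RULES
                  if not is_valid(record)]
        if not failed:
            good.append(record)
            continue
        errors.append({
            "record_no": record_no,
            "error_types": [etype for etype, _ in failed],
            # Message names every violated column or column combination.
            "error_message": "; ".join(
                f"{etype}: {', '.join(cols)}" for etype, cols in failed),
            # Whole source record stored in a single column.
            "record_data": json.dumps(record, sort_keys=True),
        })
    return good, errors


def error_summary(errors):
    """Count rejected records per error type for post-mortem reporting."""
    return Counter(etype for row in errors for etype in row["error_types"])
```

Because the rules are data rather than code, the same `validate` function can be reused across loads by swapping in a different rule list, which is the reuse point made in the reply above.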